Traditional clustering solutions perform clustering purely server-side. The browser only knows one URL (and hence one IP address) after all, so there is not much it can do (well, there is, but more on this later). Usually, the intent behind clustering is twofold:

- If one server computer crashes, the server-side setup is configured to redirect the traffic to another server computer. This is called failover.

- If one server computer is under heavy load, another server computer can share the load. This is called load-balancing.

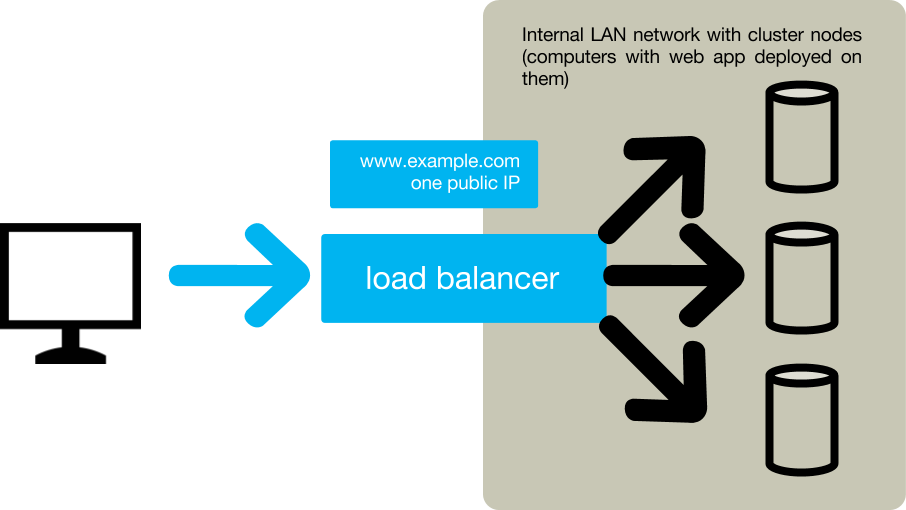

Naturally, there must be some network gizmo in front of those servers - the browser sends all of its traffic to a single IP after all, so a special computer acting as a load balancer must sit at that address. This load balancer talks to the servers, monitors their load (and whether they are alive) and passes the traffic through to the least loaded server. Usually, this is Apache with mod_proxy or some other software-based solution; more expensive setups take advantage of hardware load balancers. The load balancer should have the following properties:

- The load balancer must be extremely resilient to failures - it is a so-called single point of failure after all, that is, when it crashes, it’s over and the whole cluster is unreachable. There is a technique where two computers compete for the same IP - only one succeeds and the second one just waits until the first one crashes, then takes over the IP.

- Different servers hold different HTTP session objects in memory. If the load balancer routed requests randomly, requests from one user would land on servers that hold a different session for that user, or none at all. Since copying a session throughout the cluster after every request is not cheap and would require cluster-wide locking, another approach is usually taken: when the user logs in, the load balancer picks a server and from then on routes that user’s traffic to that very server only (so-called sticky sessions).
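
The two routing rules described above (send new users to the least loaded live server, then keep them there) can be sketched roughly as follows. This is only an illustration: `StickyBalancer`, the `{ name, load, alive }` server records and the session IDs are hypothetical names, not part of Apache, mod_proxy or any real load balancer.

```javascript
// Hypothetical sketch of least-loaded routing with sticky sessions.
class StickyBalancer {
  constructor(servers) {
    // servers: array of { name, load, alive } records (assumed shape)
    this.servers = servers;
    this.assignments = new Map(); // sessionId -> server name
  }

  route(sessionId) {
    // Sticky part: reuse the pinned server as long as it is still alive.
    const pinnedName = this.assignments.get(sessionId);
    const pinned = this.servers.find((s) => s.name === pinnedName && s.alive);
    if (pinned) return pinned.name;

    // Otherwise (new session, or pinned server died): pick the least loaded live server.
    const candidates = this.servers.filter((s) => s.alive);
    if (candidates.length === 0) throw new Error("no live servers");
    const target = candidates.reduce((a, b) => (b.load < a.load ? b : a));
    this.assignments.set(sessionId, target.name);
    return target.name;
  }
}
```

Note that when the pinned server dies, the user is routed elsewhere but the in-memory session on the dead server is gone, unless the cluster replicates it.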

The trouble with server-side clusters

Unless you are an administrator trained to set up a cluster of a particular web server, a cluster is not easy to set up, and even harder to test so that you gain confidence that it actually works and performs its duties, both failover-wise and load-balancing-wise. Even the simplest option, a group of JBoss servers with a special Red Hat-patched Tomcat as a load balancer (so that it can talk to the JBoss servers and monitor their load), takes some time to set up. Not to mention testing - often it is easier to just take the leap of faith and hope the cluster works as expected ;-)

So, for small projects clusters are often overkill; not to mention that the load balancer remains a single point of failure, unless you go for an even more complex setup with two load balancers competing for one IP address.

What about other options?

Is it possible to get the failover/load-balancer properties some other way? How about if the browser took the role of load balancing? The browser is a perfectly acceptable single point of failure - when the browser crashes, it is clear to the user that she can’t browse the web until she starts the browser again. Naturally, the browser cannot possibly know the load on the servers, so it can’t act as an informed load balancer, but what about the browser doing the failover at least?

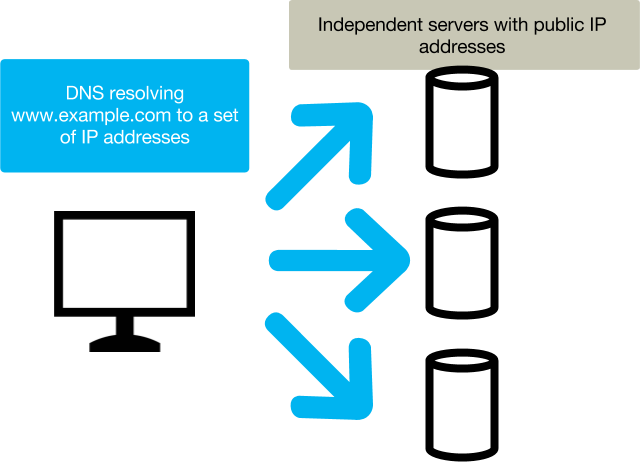

It is actually possible to bind multiple IP addresses to a single DNS record. The DNS server may then shuffle or rotate the IP list (so-called DNS round-robin), so that different browsers will try different IPs in a different order. With thousands of browsers, this works surprisingly well and the load is distributed more-or-less evenly amongst the servers. Looks great, right? Unfortunately, it isn’t. The trouble is the session. The browser is free to switch IPs often, even on every request, and if the session is not distributed amongst the computers in some way, then this approach won’t work. And distributing a session requires a server-side cluster, which we wanted to avoid. So, any other solutions?
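
To make the rotation concrete, here is a small simulation of a DNS server handing out a rotated address list on each query. It is a sketch only: `createRoundRobinResolver` is a made-up helper, the addresses come from the documentation range 192.0.2.0/24, and real DNS servers and resolvers may shuffle or cache answers differently.

```javascript
// Simulates a DNS server that rotates its list of A records on every query.
// The function name and the addresses are illustrative assumptions.
function createRoundRobinResolver(addresses) {
  let offset = 0;
  return function resolve() {
    // Rotate the list so that each query starts at a different address.
    const rotated = addresses.slice(offset).concat(addresses.slice(0, offset));
    offset = (offset + 1) % addresses.length;
    return rotated;
  };
}

const lookup = createRoundRobinResolver(["192.0.2.10", "192.0.2.11", "192.0.2.12"]);
const answerForBrowserA = lookup(); // starts with 192.0.2.10
const answerForBrowserB = lookup(); // starts with 192.0.2.11
```

The same rotation is also why the session becomes a problem: nothing guarantees that a second lookup by the same browser returns the same first address.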

Maybe we could implement a load-balancer inside the browser. How? JavaScript.

JavaScript-based load-balancer

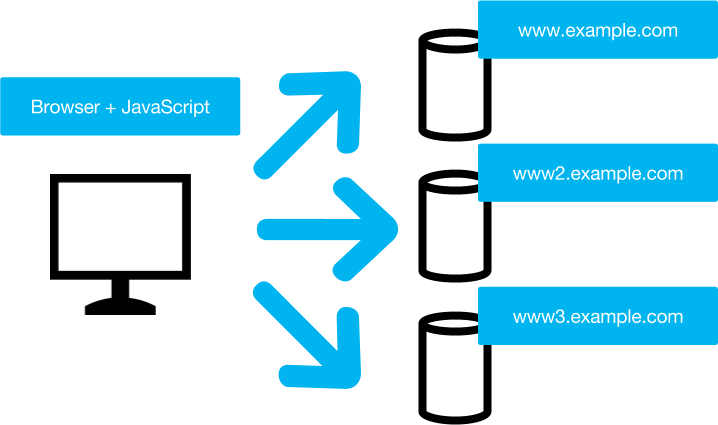

The idea is simple. Publish your servers under multiple DNS names, e.g. www.service.com, www1.service.com, www2.service.com, etc. Then, once the browser visits www.service.com, along with the page it will download a piece of JavaScript code containing a list of all the servers.

Then, in case of a server crash, just let that JavaScript code redirect to another DNS name. Simple, right? Let’s discuss this a bit:

- This approach will force the browser to always communicate with a single server, thus avoiding the necessity to distribute the session. Of course, when the server crashes, the session is lost and there is nothing we can do about it. However, clusters also tend to have trouble replicating large sessions (and with Vaadin it is easy to have a session as big as 200 kB to 1 MB or more per user). When failover happens, the session on the spare node is often not up-to-date and may in fact be several minutes old, depending on the cluster configuration. If a stale session is acceptable, then it is often just as tolerable to lose the session altogether in the event of a crash.

- This client-side approach is so easy to test that testing is hardly required. The whole load-balancer is implemented and tested by Vaadin; you only need to supply a valid set of URLs.

- The primary page is always downloaded from www.service.com, thus creating a heavy load on that server. This could be remedied - if the JavaScript load-balancer initially performs a random redirect away from the main server, the load will be distributed more evenly.

- Failover is performed by the browser, which is maintained by the user. Win!

- This approach is not so simple to use in a regular web app, but it is so totally simple to use with Vaadin it is almost a miracle. It requires no specialized infrastructure server-side; you can deploy different web servers on different OSes if you so wish; you can deploy the application cluster-wide any way you see fit - ssh, Puppet, samba share, anything.

- The session does not need to be serializable; saving the session to disk can be turned off, thus improving performance.
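
The core of such a JavaScript load-balancer is tiny. The following is a minimal sketch of the two decisions discussed above, namely which server to try after a crash and which server to pick for the initial random redirect. It is not the code of the Vaadin add-on; `SERVERS`, `nextServer` and `pickInitialServer` are hypothetical names, and the `https://` scheme is an assumption.

```javascript
// Hypothetical server list, delivered to the browser along with the page.
const SERVERS = [
  "https://www.service.com",
  "https://www1.service.com",
  "https://www2.service.com",
];

// Returns the URL to try after `currentUrl` has failed, wrapping around
// at the end of the list. An unknown URL yields the first server.
function nextServer(servers, currentUrl) {
  const index = servers.indexOf(currentUrl); // -1 when not found
  return servers[(index + 1) % servers.length];
}

// Picks a random server for the initial redirect, to move load away from
// the main server. `random` is injectable so the choice can be tested.
function pickInitialServer(servers, random = Math.random) {
  return servers[Math.floor(random() * servers.length)];
}

// In a browser, a failover could then look something like this:
//   window.location.href = nextServer(SERVERS, window.location.origin);
```

Cycling through the list in order keeps the logic predictable; picking a random spare instead would spread the users of a crashed server more evenly across the remaining ones.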

So, how is this implemented in Vaadin? In Vaadin there is a reconnection dialog, which appears every time the connection is lost. We have simply equipped the dialog with the failover code, so that in case of a server crash the dialog will automatically redirect to the next server. This is exactly what the following extension does: https://vaadin.com/directory#!addon/failover-vaadin . Just include the extension in your app and you are all set. Now *this* was simple, huh? You can watch the extension in action in the following YouTube video. The video shows four independent Jetty instances being launched in Docker on four different ports, 8080-8083.

There are obvious drawbacks to this solution. If www.service.com is down, there is no way to download the JavaScript with the additional server list, so that server kind of becomes a single point of failure. This could perhaps be remedied by employing the offline mode in HTML5, but this needs investigation. If it were possible, the browser would only need to download the JavaScript once; from then on it would be present in the browser, which would thus be able to find a live server even when the primary one is dead.

Downsides of browser-side load-balancing and failover:

- The JavaScript load-balancer cannot possibly know whether the cause of the connectivity loss is a server crash or a loss of the network. It may guess - if the connection is refused within 1 second, then the server has probably crashed. If the connection times out, then the network may be down or the server may be under heavy load. So, at some point it needs to ask the user to either reconnect to a spare server, or keep waiting. This needs investigating.

- DDoS attacks. There is no load-balancer computer set up to deflect a DDoS; a DDoS may also target and overload individual servers, not bringing them down completely but making them really slow to respond. It might be possible to put some sort of DDoS filter in front of the webapp though.
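
The guessing heuristic from the first downside above could be sketched like this. It assumes the caller measures how long the failed request took and applies its own timeout, since browser APIs generally do not report why a connection failed. `classifyFailure`, `decideAction` and the returned labels are hypothetical; the 1 second threshold is the one mentioned above.

```javascript
// Guesses the cause of a failed request from its timing alone.
// elapsedMs: how long the request ran before failing (measured by the caller)
// timedOut:  true when the caller's own timeout fired
function classifyFailure(elapsedMs, timedOut, fastFailMs = 1000) {
  // A quick, explicit failure suggests the server refused the connection.
  if (!timedOut && elapsedMs < fastFailMs) return "server-probably-down";
  // A timeout or slow failure may be a dead network or an overloaded server.
  return "ambiguous";
}

// Fail over automatically only when the guess is reasonably safe;
// otherwise let the user choose between reconnecting and waiting.
function decideAction(elapsedMs, timedOut) {
  return classifyFailure(elapsedMs, timedOut) === "server-probably-down"
    ? "failover"
    : "ask-user";
}
```

This is a guess, not a diagnosis: a fast failure can also happen when the local network is down, so a real implementation would need more signals or a confirmation from the user.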

So, if you are willing to sacrifice the session in case of crash, this solution may be perfect for you.